A quick overview of our industry

I have been in the IT industry, and in Android in particular, for roughly 10 years now, and one thing I can say with great confidence is that this industry moves incredibly fast. Looking back over these years, the evolution of the OS itself, and of the community around it, has been extraordinary. However, it sometimes feels like our industry lacks good practices and discipline in the development process. It lacks professionalism.

Why am I saying this? Over all these years I have watched developers, colleagues, and entire companies struggle to establish good practices around their software development processes. In secondary school we learned about algorithms, and that is where I first got in touch with programming languages. I then continued studying programming languages at university. Neither taught the students any good practices or discipline. We learned how to use the languages and how to write small programs for Fibonacci, factorial, string manipulation and so on, but we weren't taught what is expected from us as professionals, or how much our professionalism would matter to our future customers.

Part of the problem probably lies in the fact that our industry is quite young, and the professors did not have the required experience and discipline themselves. Luckily, we are organizing ourselves into communities where we share and learn from each other, and as time passes things keep getting better.

Software testing

It is quite unfortunate, in 2019, to still see software development teams split their work into implementing the production code on one side and writing the tests on the other. It's sad that some people still ask for (or quietly calculate) additional time in their estimates dedicated to writing tests. I am happy to see that this has dropped significantly in recent years, but it still exists. It was happening quite a lot some 10 years ago, and frankly, that's very sad.

In my opinion, writing the tests shouldn't be different or separate from writing the production code. I see the tests as a construction around the code that makes sure the code behaves the way we expect it to, and that detects any change to that expected behavior when the code gets edited in the future. Furthermore, when we get back to the code after a while, the tests describe its intentions and expected behavior, which makes it easier to understand what's going on.

When I mentioned the lack of good practices and discipline earlier, and the things that are not taught at school or university, this is one of them. We weren't taught how important software testing is and, more importantly, we weren't taught that testing is part of our job and our responsibility. We are paid reasonably good money to do our job right, and making sure that what we build meets the expectations is absolutely part of that job and our own responsibility!

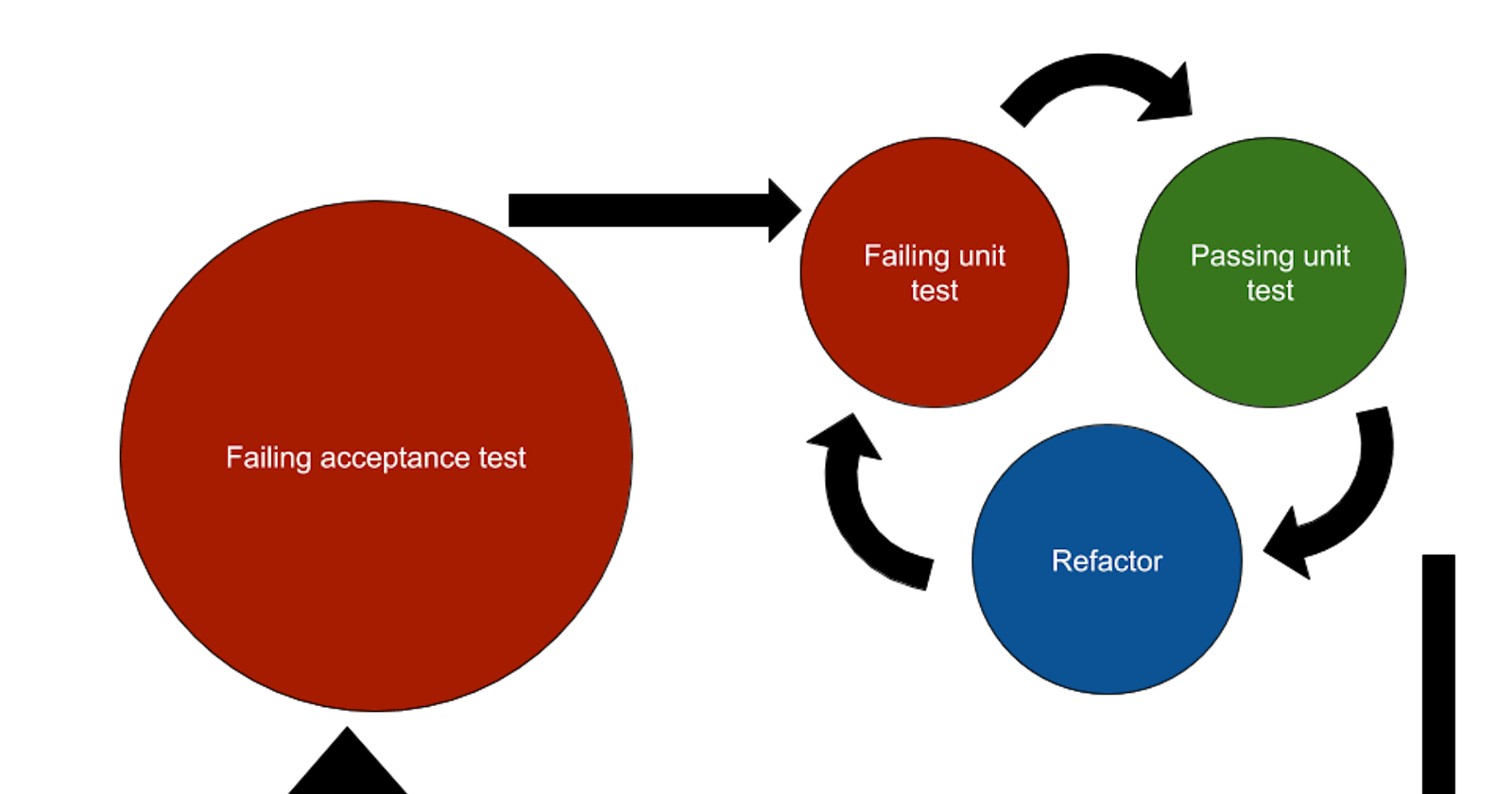

Test-Driven Development

There are a lot of discussions and arguments about TDD in particular, and some quite strong opinions on the topic as well. Here I would like to describe how I look at it, and how I approach software development.

At the very beginning, I would like to emphasize that not all code is testable. There are situations where some parts of the code are out of our control. I strive to guard the code that is under my control from the code that is not, and that way I work out how to get the parts under my control tested.

Writing tests after the implementation simply doesn't work for me. There are situations when I don't know what my options and possibilities are, and I may try something out quickly, but once I understand what I want to do, I delete those changes and start all over by writing the test first. Writing tests after the implementation works for many people, and that's fine. I am not against it, because writing them afterward is better than not writing tests at all. At this point I am so used to TDD that I don't think much about other approaches.

Before I started practicing it, I would often find myself struggling to catch small regressions that were not visible, and so not handled, during the implementation. It was hard and sometimes impossible to foresee them. On the other hand, once the implementation was done, I tended to write tests for the positive flow, sometimes for the positive and the negative flows, and only rarely got to the edge cases. Again, this is not always true, and it doesn't happen to everyone, but I'm sure it happens to many, if not the majority. I keep seeing it happen. I've seen people introduce weird hacks to make some parts testable, hacks that would not have been needed if the tests had been written up front. I've seen people write tests for the sake of writing them: they write something without much attention and move on. In the end, nothing valuable comes out of those tests.
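To make the idea of guarding the code under my control more concrete, here is a minimal sketch in plain Java. It is not taken from the screencast, and every name in it (`HourSource`, `SystemHourSource`, `Greeter`, `check`) is hypothetical. It assumes the uncontrolled part is the system clock: that part is hidden behind a small interface, so the logic I own can be driven from checks written up front, including the edge cases at the boundaries between the time ranges.

```java
import java.time.LocalTime;

public class GreetingExample {

    // Hypothetical boundary: the only place that touches code we do not control.
    interface HourSource {
        int currentHour(); // expected range: 0..23
    }

    // Production adapter around the system clock. It is kept so thin
    // that leaving it untested is an acceptable trade-off.
    static final class SystemHourSource implements HourSource {
        @Override
        public int currentHour() {
            return LocalTime.now().getHour();
        }
    }

    // Code under our control: it depends only on the boundary interface.
    static final class Greeter {
        private final HourSource hours;

        Greeter(HourSource hours) {
            this.hours = hours;
        }

        String greet(String name) {
            int hour = hours.currentHour();
            String prefix;
            if (hour < 12) {
                prefix = "Good morning";
            } else if (hour < 18) {
                prefix = "Good afternoon";
            } else {
                prefix = "Good evening";
            }
            return prefix + ", " + name;
        }
    }

    // Tiny stand-in for a test framework assertion.
    static void check(boolean condition, String description) {
        if (!condition) {
            throw new AssertionError("Failed: " + description);
        }
    }

    public static void main(String[] args) {
        // Each check replaces the real clock with a fixed hour via a lambda.
        check(new Greeter(() -> 9).greet("Ana").equals("Good morning, Ana"), "morning");
        check(new Greeter(() -> 15).greet("Ana").equals("Good afternoon, Ana"), "afternoon");
        check(new Greeter(() -> 21).greet("Ana").equals("Good evening, Ana"), "evening");
        // Edge cases: the exact hours where the greeting changes.
        check(new Greeter(() -> 0).greet("Ana").equals("Good morning, Ana"), "midnight");
        check(new Greeter(() -> 12).greet("Ana").equals("Good afternoon, Ana"), "noon");
        check(new Greeter(() -> 18).greet("Ana").equals("Good evening, Ana"), "18:00");
        System.out.println("all checks passed");
    }
}
```

In a real project the checks would live in a test class and use a test framework; the plain `main` method is used here only to keep the sketch self-contained.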

Code Coverage

Many times I've seen people striving for 100% code coverage. For some people, it is even a requirement from management. This is very wrong. It's wrong because a coverage tool marks a function as covered as soon as a test calls it, whether or not the test checks the result. The function may still return the wrong output for a given input. So what's the value of having the coverage if the actual behavior is never checked for correctness?

I use the code coverage tool while refactoring, especially when I have to refactor code that is not tested. Before I start refactoring a piece of code, I write tests and run them with the coverage tool to make sure they cover the parts I want to refactor. Then I refactor the code with confidence, because if I break something the tests will tell me.
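The difference between covered and checked can be sketched in a few lines of plain Java. This is an illustration under assumptions, not output from any real coverage tool: `discountedPrice`, its deliberately wrong twin `brokenDiscountedPrice`, and the two test-style helpers are all hypothetical names. Both helpers execute every line of the function they are given, so a line-coverage report would look the same for both, yet only one of them notices the broken variant.

```java
import java.util.function.IntBinaryOperator;

public class CoverageExample {

    // Correct implementation: price in cents reduced by a whole-number percentage.
    static int discountedPrice(int priceCents, int percent) {
        return priceCents - priceCents * percent / 100;
    }

    // Deliberately wrong variant (adds instead of subtracts),
    // present only to show what each test style is able to detect.
    static int brokenDiscountedPrice(int priceCents, int percent) {
        return priceCents + priceCents * percent / 100;
    }

    // "Coverage only" style: runs the function, so its lines count as covered,
    // but the result is thrown away and nothing is verified.
    static boolean callOnlyTestPasses(IntBinaryOperator pricing) {
        pricing.applyAsInt(1000, 20);
        return true;
    }

    // Behavior-checking style: same lines executed, but the output is compared
    // with the expected values.
    static boolean assertingTestPasses(IntBinaryOperator pricing) {
        return pricing.applyAsInt(1000, 20) == 800
                && pricing.applyAsInt(999, 0) == 999;
    }

    public static void main(String[] args) {
        System.out.println(callOnlyTestPasses(CoverageExample::discountedPrice));        // true
        System.out.println(callOnlyTestPasses(CoverageExample::brokenDiscountedPrice));  // true
        System.out.println(assertingTestPasses(CoverageExample::discountedPrice));       // true
        System.out.println(assertingTestPasses(CoverageExample::brokenDiscountedPrice)); // false
    }
}
```

The call-only style passes for the broken variant too, which is why a coverage number on its own says nothing about whether the behavior is right.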

The Screencast

Finally, I gathered the courage to do something I have wanted to do for quite some time. I recorded a screencast in which I demonstrate how I approach my daily job. I have been following this approach for quite a while now, and its general shape stays the same: I start by writing a test, then I write the assertions, where I write what I want to read, then I make it compile, and finally I write the implementation to satisfy the test.

Of course, there are always small things here and there that keep changing and improving. For example, right after recording the screencast I read a nice article about mocking coroutines by Danny Preussler and learned about verifyBlocking and onBlocking, which I will start using from now on. At the very beginning of my journey with TDD, my approach was very different from what it is now. But as with everything, the more you practice, the better and more confident you become, and the more new and easier techniques you develop. Here is a playlist of YouTube videos where I demonstrate TDD, please check it out:

Final thoughts

The most important thing for every one of us is to do what we love and to feel great joy doing it. I found out that what I demonstrated in that video makes me very happy, and it's very joyful for me. I have inspired many people inside the organizations where I used to work, the same way I got inspired and still keep getting inspired every day. This clearly works for me. You can give it a try and see if it works for you too. If it doesn't, all good. Stick to what works for you. My hope is that this article, together with the video, will be useful to many people, and that many of them will get motivated and inspired, or that at least something new or useful will come out of it.